Camera selection based on pixel density

Since security surveillance entered the high-definition era, one advantage is that a camera's effectiveness can be quantified by pixel density, which makes it possible to select a security camera that meets the requirements.

In the analog standard-definition era, it was difficult to measure camera quality with concrete numerical criteria; cameras were described only as 500 TVL or 700 TVL. In particular, when we wanted to describe which camera could see a face or distinguish a target's outline, TV lines (TVL) were clearly inadequate. The fundamental reason is that in the analog era camera resolution was essentially fixed and the differences between cameras were small. Once a camera was connected to a back-end DVR for encoding, the recorded resolution was generally CIF (352 × 288), 4CIF, or D1 (704 × 576), and in hindsight these resolutions differ little in practical effect. We could only distinguish cameras for different recognition purposes by lens focal length, which was vague and imprecise.

By contrast, a statement such as "80 × 80 pixels is sufficient for face recognition" is clear and intuitive, because 80 × 80 pixels is a specific, quantifiable value.

Pixel density, in short, is the number of pixels that fall on a target per unit of its real-world size, which determines what the human eye or machine vision can recognize (it is generally calculated from the horizontal resolution). The higher the camera resolution, the smaller the share of the frame a target needs to occupy to reach a given pixel count.
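The definition above reduces to a simple ratio: the horizontal resolution divided by the width of the scene at the target's distance. A minimal Python sketch, assuming the target lies in the plane where the scene width is measured and ignoring lens distortion; the function names are hypothetical helpers for illustration:

```python
def pixels_per_meter(horizontal_pixels: int, scene_width_m: float) -> float:
    """Horizontal pixel density (PPM) in the plane where the scene is scene_width_m wide."""
    if scene_width_m <= 0:
        raise ValueError("scene width must be positive")
    return horizontal_pixels / scene_width_m


def pixels_on_target(horizontal_pixels: int, scene_width_m: float, target_width_m: float) -> float:
    """Number of horizontal pixels that fall across a target of the given width."""
    return pixels_per_meter(horizontal_pixels, scene_width_m) * target_width_m


# A 1920-pixel-wide image covering a 4 m wide scene:
print(pixels_per_meter(1920, 4.0))                  # 480.0
print(round(pixels_on_target(1920, 4.0, 0.16), 1))  # 76.8 pixels across a 16 cm wide face
```

The same camera covering a wider scene spreads its pixels more thinly, which is why the standards below are expressed as densities rather than as raw resolutions.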

Pixel density standard

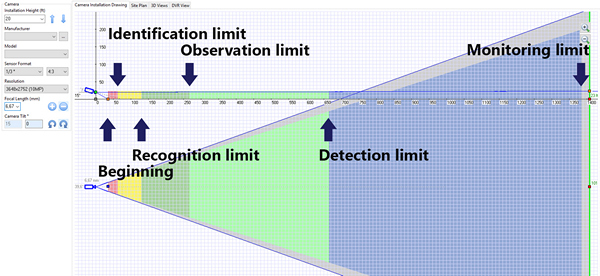

In general, taking people as the target, pixel density standards can be divided by surveillance purpose into five levels: identification, recognition, observation, detection, and monitoring.

- Identification: the details are sufficient to establish the identity of the target individual beyond doubt.

- Recognition: it can be determined whether the displayed target is the same person as someone seen before.

- Observation: some characteristic details of the individual are visible, such as distinctive clothing or whether the person is wearing a hat or glasses.

- Detection: it can be determined whether a target in the frame is a person.

- Monitoring: crowds in the monitored scene can be watched and controlled.

Johnson Criterion

The Johnson criteria come from the field of infrared thermal imaging, where they are used to determine the minimum resolution needed to detect an object. John Johnson, an American military scientist, developed this method for predicting the performance of sensor systems in the 1950s. The object can be a person, usually assessed by a critical width of 0.75 meters (2.46 feet), or a car, usually assessed by a critical length of 2.3 meters (7.55 feet). Johnson examined observers' ability to recognize scale-model targets under different conditions and proposed criteria for the minimum resolution required.

The Johnson criteria levels for infrared thermal imaging, expressed in pixels across the target's critical dimension, are as follows:

- Detection: at least 1.5 pixels are required (the presence of an object can be seen).

- Recognition: at least 6 pixels are required (the object can be classified, for example, a person in front of a fence).

- Identification: at least 12 pixels are required (the object and its characteristics can be made out, for example, a person holding a crowbar).
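Applying these thresholds amounts to counting the pixels that land on the critical dimension and comparing against 1.5, 6, and 12. A minimal sketch, assuming a flat scene of known width at the target's distance; the 640-pixel thermal image and 100 m field of view are hypothetical numbers chosen only for illustration:

```python
from typing import Optional

# Johnson-criterion thresholds quoted above, in pixels across the target's
# critical dimension, ordered from most to least demanding.
JOHNSON_LEVELS = [
    ("identification", 12.0),
    ("recognition", 6.0),
    ("detection", 1.5),
]

PERSON_CRITICAL_M = 0.75   # critical width of a person
VEHICLE_CRITICAL_M = 2.3   # critical length of a car


def pixels_across(horizontal_pixels: int, scene_width_m: float, critical_m: float) -> float:
    """Pixels covering the critical dimension when the image spans scene_width_m."""
    return horizontal_pixels * critical_m / scene_width_m


def johnson_level(pixels: float) -> Optional[str]:
    """Return the highest Johnson level reached, or None if below detection."""
    for name, minimum in JOHNSON_LEVELS:
        if pixels >= minimum:
            return name
    return None


# Hypothetical 640-pixel-wide thermal image covering a 100 m wide field of view:
print(johnson_level(pixels_across(640, 100.0, PERSON_CRITICAL_M)))   # detection (4.8 px)
print(johnson_level(pixels_across(640, 100.0, VEHICLE_CRITICAL_M)))  # identification (about 14.7 px)
```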

The pixel density standards used in security video surveillance evidently draw on the Johnson criteria and extend them to suit high-definition network video surveillance.

UK Home Office, 2009

This standard dates from the analog standard-definition era. Its categories are defined by the proportion of the screen height occupied by a person's body.

| Category | Abbreviation | Operational requirement | Person's height as % of screen height (4CIF) |
|---|---|---|---|
| Identification | Id | The details should be sufficient to establish the identity of the individual beyond doubt. | 100%–150% |
| Recognition | Rec | Viewers can say with a high degree of certainty whether the person shown is the same as someone they have seen before. | 50% |
| Detection | Det | Viewers can determine whether a person is present. | 10% |
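Because these categories are stated as a share of screen height rather than as a density, it can help to convert them to pixels. A minimal sketch, assuming 4CIF's 576 vertical lines and a hypothetical person height of 1.7 m (the height is our assumption, not part of the Home Office standard); note the result is a vertical density, whereas the later standards are usually quoted horizontally:

```python
SCREEN_LINES_4CIF = 576  # vertical resolution of 4CIF (704 x 576)

# Person height as a fraction of screen height (UK Home Office 2009, table above).
UK_HOME_OFFICE_2009 = {
    "identification": 1.00,  # lower bound of the 100%-150% range
    "recognition": 0.50,
    "detection": 0.10,
}

ASSUMED_PERSON_HEIGHT_M = 1.7  # hypothetical height, not from the standard


def vertical_pixels_on_person(screen_fraction: float, screen_lines: int = SCREEN_LINES_4CIF) -> float:
    """Vertical pixels covering a person who fills screen_fraction of the frame height."""
    return screen_fraction * screen_lines


def vertical_ppm(screen_fraction: float, person_height_m: float,
                 screen_lines: int = SCREEN_LINES_4CIF) -> float:
    """Vertical pixels per meter on a person of the given (assumed) height."""
    return vertical_pixels_on_person(screen_fraction, screen_lines) / person_height_m


for category, fraction in UK_HOME_OFFICE_2009.items():
    px = vertical_pixels_on_person(fraction)
    ppm = vertical_ppm(fraction, ASSUMED_PERSON_HEIGHT_M)
    print(f"{category}: {px:.0f} px of height, about {ppm:.0f} px/m")
```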

Axis Standard

Building on the UK Home Office 2009 standard above, Axis proposed a standard focused on pixel density, expressed as the number of pixels across the object of interest (usually a human face).

The face carries distinctive identifying features, and face width varies less from person to person than body size does with weight. The average width of a human face is 16 cm (6.3 inches). Based on recommendations and test results from the Swedish National Laboratory of Forensic Science, Axis chose 80 pixels per face as the requirement for identification under particularly difficult conditions.

| Operational requirement | Horizontal pixels/face | Pixels/cm | Pixels/inch |
|---|---|---|---|
| Identification (bad light) | 80 pixels/face | 5 pixels/cm | 12.7 pixels/inch |
| Identification (good light) | 40 pixels/face | 2.5 pixels/cm | 6.3 pixels/inch |
| Recognition | 20 pixels/face | 1.25 pixels/cm | 3.2 pixels/inch |
| Detection | 4 pixels/face | 0.25 pixels/cm | 0.6 pixels/inch |
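Every column of the Axis table is derived from the pixels-per-face figure and the 16 cm average face width. A minimal sketch of that conversion, with a hypothetical helper name; it reproduces the table's per-centimeter values and also gives pixels per meter for comparison with the PPM-based standards:

```python
from typing import Dict

AVERAGE_FACE_WIDTH_CM = 16.0  # average face width used by Axis
CM_PER_INCH = 2.54


def axis_densities(pixels_per_face: float) -> Dict[str, float]:
    """Convert a pixels-per-face requirement into px/cm, px/inch and px/m."""
    px_per_cm = pixels_per_face / AVERAGE_FACE_WIDTH_CM
    return {
        "px_per_cm": px_per_cm,
        "px_per_inch": px_per_cm * CM_PER_INCH,
        "px_per_m": px_per_cm * 100,
    }


REQUIREMENTS = [
    ("Identification (bad light)", 80),
    ("Identification (good light)", 40),
    ("Recognition", 20),
    ("Detection", 4),
]

for label, px in REQUIREMENTS:
    d = axis_densities(px)
    print(f"{label}: {d['px_per_cm']:.2f} px/cm, "
          f"{d['px_per_inch']:.1f} px/inch, {d['px_per_m']:.0f} px/m")
```

For 80 pixels per face this gives 5 px/cm, 12.7 px/inch, and 500 px/m.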

EU EN 62676-4:2015, EN 50132-7:2012

European standards EN 62676-4:2015 (IEC 62676-4), Video surveillance systems for use in security applications – Part 4: Application guidelines, and EN 50132-7:2012.

The EU standards define each level by how much of the target a single pixel may cover: for identification, each pixel covers no more than 4 mm of the target; for recognition, no more than 8 mm; for observation, no more than 16 mm; for detection, no more than 40 mm; and for monitoring, no more than 80 mm.

These values can be converted into PPM (pixels per meter) or PPF (pixels per foot).

| Category | PPM | PPF | mm per pixel |
|---|---|---|---|
| Identification (Id) | 250 | 76 | 4 |
| Recognition (Rec) | 125 | 38 | 8 |
| Observation (Obs) | 62.5 | 19 | 16 |
| Detection (Dete) | 25 | 8 | 40 |
| Monitoring (Monit) | 12.5 | 4 | 80 |
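The PPM column is simply 1000 divided by the millimeters per pixel, and PPF is PPM multiplied by 0.3048 m per foot. A minimal sketch of that conversion (helper names are hypothetical), which reproduces the table with PPF rounded to whole pixels:

```python
MM_PER_METER = 1000.0
METERS_PER_FOOT = 0.3048

# Maximum target coverage per pixel for each EN 62676-4 level, in mm.
EN_62676_4_MM_PER_PIXEL = {
    "Identification": 4,
    "Recognition": 8,
    "Observation": 16,
    "Detection": 40,
    "Monitoring": 80,
}


def ppm_from_mm_per_pixel(mm_per_pixel: float) -> float:
    """Pixels per meter when each pixel covers mm_per_pixel of the target."""
    return MM_PER_METER / mm_per_pixel


def ppf_from_ppm(ppm: float) -> float:
    """Convert pixels per meter to pixels per foot."""
    return ppm * METERS_PER_FOOT


for name, mm in EN_62676_4_MM_PER_PIXEL.items():
    ppm = ppm_from_mm_per_pixel(mm)
    print(f"{name}: {ppm:g} PPM, {ppf_from_ppm(ppm):.0f} PPF")
```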

Samsung (Hanwha) standard

| Category | Pixel density |
|---|---|
| Face recognition | 5.33 px/cm |
| Identify details | 2.62 px/cm |
| Identify objects | 1.97 px/cm |
| Human body detection, license plate recognition (software) | 1.33 px/cm |
| Basic monitoring | 0.66 px/cm |

China GB/T 35678-2017

The Chinese national standard GB/T 35678-2017, which sets image technical requirements for face recognition applications in public security, requires the distance between the two eyes to be at least 30 pixels, and preferably at least 60 pixels.
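GB/T 35678-2017 states its requirement in pixels between the eyes rather than as a density, so relating it to scene width needs a physical eye distance. A minimal sketch, assuming an eye-center distance of about 6.3 cm, a typical adult value that is our assumption and not part of the standard; the 1920-pixel camera and helper names are hypothetical:

```python
# Assumption: typical adult distance between eye centers; not from GB/T 35678-2017.
ASSUMED_EYE_DISTANCE_CM = 6.3

MIN_EYE_DISTANCE_PX = 30        # required by GB/T 35678-2017
PREFERRED_EYE_DISTANCE_PX = 60  # preferred by GB/T 35678-2017


def required_px_per_cm(eye_distance_px: float,
                       eye_distance_cm: float = ASSUMED_EYE_DISTANCE_CM) -> float:
    """Horizontal pixel density needed to place eye_distance_px between the eyes."""
    return eye_distance_px / eye_distance_cm


def max_scene_width_cm(horizontal_pixels: int, eye_distance_px: float,
                       eye_distance_cm: float = ASSUMED_EYE_DISTANCE_CM) -> float:
    """Widest scene (at the face plane) that still meets the eye-distance requirement."""
    return horizontal_pixels / required_px_per_cm(eye_distance_px, eye_distance_cm)


# Hypothetical 1920-pixel-wide camera:
print(round(max_scene_width_cm(1920, MIN_EYE_DISTANCE_PX), 1))        # 403.2
print(round(max_scene_width_cm(1920, PREFERRED_EYE_DISTANCE_PX), 1))  # 201.6
```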

The Ministry of Public Security standard GA/T 893 (terminology for security biometric recognition applications) specifies 15 pixels for character recognition (200 pixels/m), with illumination of 300–500 lux for facial recognition and 150 lux for license plate recognition.

In addition, standards related to face recognition algorithms specify a minimum face size of 80 × 80 pixels for face recognition.

US Department of Homeland Security 2013 (DHS 2013) recommendations

In 2013, the U.S. Department of Homeland Security (DHS) published recommended pixels-per-foot values for different video surveillance functions in its Digital Video Quality Handbook (pages 27–28).

- Observation: 20 PPF

- Forensic review: 40 PPF

- Recognition: 80 PPF

New French standard

The newly proposed French standard discusses pixel densities, expressed as PPM values, for detection/observation/recognition/identification (DORI) tasks and related uses:

- Detection: 30 PPM

- Identification: 100 PPM

- License plate reading: 200 PPM

- Label recognition: 400 PPM
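The standards above mix three units: px/cm (Axis, Samsung), PPF (DHS 2013), and PPM (EN 62676-4, the French standard). A minimal sketch that normalizes a selection of the quoted thresholds to PPM so they can be compared side by side; the unit strings and helper name are hypothetical, and only values stated earlier in this article are used:

```python
METERS_PER_FOOT = 0.3048


def to_ppm(value: float, unit: str) -> float:
    """Normalize a pixel density given in 'ppm', 'ppf' or 'px/cm' to pixels per meter."""
    if unit == "ppm":
        return value
    if unit == "ppf":
        return value / METERS_PER_FOOT
    if unit == "px/cm":
        return value * 100
    raise ValueError(f"unknown unit: {unit}")


THRESHOLDS = [
    ("DHS 2013 observation", 20, "ppf"),
    ("DHS 2013 forensic review", 40, "ppf"),
    ("DHS 2013 recognition", 80, "ppf"),
    ("French detection", 30, "ppm"),
    ("French identification", 100, "ppm"),
    ("French license plate reading", 200, "ppm"),
    ("French label recognition", 400, "ppm"),
    ("Samsung face recognition", 5.33, "px/cm"),
    ("Samsung basic monitoring", 0.66, "px/cm"),
]

# Print the thresholds from least to most demanding.
for name, value, unit in sorted(THRESHOLDS, key=lambda t: to_ppm(t[1], t[2])):
    print(f"{to_ppm(value, unit):7.1f} PPM  {name}")
```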

Application examples

The sections above introduce the pixel density standards used in the security field in different countries. How are these standards applied in practice?

Taking the Axis standard as an example, once the operational requirement for a scene is known, we can calculate the minimum resolution required. To recognize people at a 10-meter-wide loading dock, we need at least 125 pixels/meter, or 1,250 pixels in total. A camera with a horizontal resolution of 1280 pixels is therefore sufficient for the job.
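The loading-dock calculation is the required density multiplied by the scene width, rounded up to a whole pixel. A minimal sketch with hypothetical helper names, reproducing the 125 pixels/meter × 10 m example:

```python
import math


def min_horizontal_pixels(required_ppm: float, scene_width_m: float) -> int:
    """Smallest horizontal resolution that reaches required_ppm across the scene."""
    return math.ceil(required_ppm * scene_width_m)


def resolution_sufficient(horizontal_pixels: int, required_ppm: float,
                          scene_width_m: float) -> bool:
    """True if a camera with this horizontal resolution meets the requirement."""
    return horizontal_pixels >= min_horizontal_pixels(required_ppm, scene_width_m)


print(min_horizontal_pixels(125, 10))        # 1250
print(resolution_sufficient(1280, 125, 10))  # True
print(resolution_sufficient(1024, 125, 10))  # False
```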

As another example, a customer uses a high-definition network camera to monitor an office and wants to know the maximum scene width at which people can still be identified. The camera resolution is 1920 × 1080 pixels; with the Axis requirement of 5 pixels/cm for identification, the maximum scene width is 1920 / 5 pixels/cm = 384 cm.
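The office example inverts the same relationship: maximum scene width equals horizontal pixels divided by the required density. A minimal sketch using the Axis identification densities from the table above (5 px/cm in bad light, 2.5 px/cm in good light); the function name and the 1280-pixel comparison are hypothetical illustrations:

```python
def max_scene_width_cm(horizontal_pixels: int, required_px_per_cm: float) -> float:
    """Widest scene, in cm, that still meets the required pixel density."""
    return horizontal_pixels / required_px_per_cm


print(max_scene_width_cm(1920, 5.0))  # 384.0  identification, bad light
print(max_scene_width_cm(1920, 2.5))  # 768.0  identification, good light
print(max_scene_width_cm(1280, 5.0))  # 256.0  lower resolution, narrower scene
```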

The lower the resolution, the narrower the maximum scene width becomes. With a higher-resolution image, the capture line can be placed farther from the camera, providing a larger identification area.

When there are many calculations to perform, or the calculations are cumbersome, third-party online calculators can help, for example JVSG, Axis' pixel calculator, and Samsung's tool.
